Best Practices for TargetX Recruitment and Retention

- Identify users for the Student Success Center based on the Recruitment phase. Determine whether new users must be added to the system and ensure full Salesforce licenses are provided.

- Determine the data that must be loaded to support Flags, Alerts, and Kudos. Planning for your data needs allows you to utilize all the features of the Recruitment Suite. Example: You will need LMS data to bring in "last date of attendance" or "grade" data to trigger a flag/alert.

- If you are concerned about the number of Salesforce licenses you may need, consider creating a FormAssembly form for Faculty to submit Early Alerts or Kudos without having to log in to the TargetX CRM.

- Prepare legacy data before your project to ensure data files are ready for conversion. This will likely require you to work with your previous vendor, and it often takes time to work through details.

- When building reports for multiple objects, create filters on the child object, not the master/parent object.

For example, if you are writing a report with a Report Type of 'Contacts with Applications' using the field 'Student Type' as one of the filters, then you should use the 'Student Type' field from the child object (Application), and not the 'Student Type' field from the parent object (Contact).

- The TargetX Retention Product should be a one-way, periodic integration from the Student Information System / Learning Management System. Any actions taken within the Student Success Center or any other part of the TargetX Retention Product would not be returned to the SIS/LMS. A bi-directional integration is more difficult to maintain than a one-way integration. Moreover, the SIS/LMS are the official data sources for Grades, Holds, etc. The data surrounding retention in TargetX should simply be a mirror of SIS/LMS Data. The Student Success Center should be considered as an engagement tool for current students, not a place to create official data on course grades, holds, etc.

- Take advantage of Salesforce automation and formulas for repeatable tasks, emails, field calculations, status changes, etc. as this allows users to focus on recruiting instead of managing the system.

- To use Address Plus, Contact records must be assigned the Recruitment Manager Student Record Type and must use five-digit ZIP codes, not the ZIP+4 format.

- Prospect Scoring criteria should not be based on an internal user’s (counselor/staff) actions. Scores should be based on the prospect's interaction with the school.

- Profile Builder: Source fields and Target fields must be the same field data type, for example text (text formula, picklist, string), number (number string, formula number), or boolean. If you need to roll up a field that does not match the field type of the target object/field, you can create a formula field on the source object to translate that data and use that formula field as your new source field. Mismatched data types will break the rollups for that object.

- Clean up data periodically (Tasks/Activities, Email Templates, Checklist Items, Application Reviews, Application Form Histories, Period Deadlines, Academic Periods, and other old records). Consider using DemandTools after each recruiting cycle to remove old data. Purging old data regularly helps you stay within data storage limits.

- When building condition sets, use only 2 or 3 fields per condition set to minimize complexity. If condition set logic becomes too complex, consider using a formula field to output all possible valid combinations so that the condition set may only need one criterion using the formula field.

- Using fields with many picklist values, such as Program, may result in too many condition sets to manage. Try using broader categories such as Department or College to group populations for easier maintenance. Using too many criteria within condition sets could breach the JSON character limits, resulting in a non-functioning portal or emails.

- Schedule high-level reports to email stakeholders who are normally not CRM users:

  - Any person without a Salesforce license can receive relevant updates via scheduled Reports.

  - Scheduling reports could also notify CRM users when data needs to be cleaned.

- Make sure that your Call-to-Action items are located at the top of your home (first) page. Do not force your portal users to scroll past content or click into another page to see the action items. For example, place the Start Application button near the top of the first page.

- Before deploying a Survey, consider first linking collected data back to the contact record for better future reporting. However, if there are ethical reasons to keep the survey anonymous, then deploy the survey link to collect data anonymously. Data collected and linked to the contact can be used to make more targeted future email campaigns and deeper enrollment analyses.

- Keep flags (or visualizations) to a maximum of TWO per object. For example, construct one flag indicating current academic standing and one for proximity using the home address on the contact record. Ensure the flags are located at the top of each object's page layout for easy indication. Too many flags per object clutter the layout with an overload of information. The purpose of a flag is to aggregate data to give users an easy indication of the status of a particular record without digging into too many fields.
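The Address Plus item in the list above calls for five-digit ZIP codes rather than ZIP+4. If your source data contains a mix of both, the values can be trimmed before they are loaded. The following is a minimal sketch of that clean-up step, not a TargetX or Salesforce feature; the function name `five_digit_zip` is hypothetical, and it assumes US ZIP codes only.

```python
import re

def five_digit_zip(raw):
    """Return the five-digit ZIP from a 5-digit or ZIP+4 value, else None."""
    # Accepts "12345", "12345-6789", "12345 6789", or "123456789".
    match = re.fullmatch(r"\s*(\d{5})(?:[-\s]?\d{4})?\s*", str(raw))
    return match.group(1) if match else None

for value in ["19087-1234", "19087", "190871234", "1908"]:
    print(value, "->", five_digit_zip(value))
```

Values that do not look like a ZIP code return `None` so they can be reviewed by hand instead of being loaded as-is.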

Best Practices for TargetX Recruitment and Retention: End-Users

- Log follow-up calls on tasks while you are on a call with a student to make sure you call them back when you say you will. This will help you stay organized and build credibility with the student.

- Where possible, use automated tasks/activities to organize recurring and non-recurring to-dos. Consider using Workflows to create tasks that are based on clear criteria. It is a good habit to create tasks for non-recurring items that need to be completed.

- Create your own Reports and Dashboards for the records/populations for which you are responsible. This will make it easier to retrieve the data relevant to you and minimize the need to reach out to other resources for information.

Faculty Early Alerts and Cohort Scoring Best Practices

Establish a Pilot Process

Rolling out a new Faculty Alerts process may raise questions such as, “How do we get faculty to support the process?” or, “What type of alerts and kudos should faculty issue, and when?” To help answer these questions, it is recommended that your institution, if it hasn’t already, identify a cohort of faculty to pilot the initial rollout of Faculty Early Alerts. This cohort can be select faculty from a certain campus or faculty within a given department/division.

Research has shown that faculty alerts can be impactful, but it’s also the reality that folks want to see this type of data within their own institution before they agree to participate. Selecting a cohort to test pilot the use of Faculty Early Alerts will do several important things:

- Data: The collection of data is now underway. There are several data points that can be collected and utilized.

  - Student Interventions/Support Services: As soon as faculty start issuing alerts or kudos, the institution can track the types of “at-risk” students and identify the interventions taken to support their success.

  - Student Progress/Success: On the flip side, it can also show if the use of kudos is encouraging students and providing them additional confidence to be successful in the course and throughout the overall semester.

  - Faculty Logins: Faculty usage is another data point your institution will want to collect. Usage data gathered from faculty during the pilot can help determine the number of logins and the number of licenses needed for a future academic year. Connect with your TargetX AE for guidance on license types.

If the data lives in Salesforce, your institution can use it! This is the opportunity to look at the data points and establish institution retention and student success goals.

- Faculty Input: By selecting a specific cohort of faculty to participate, your institution can obtain additional input from faculty that will help develop the general rollout. Your institution can establish an FAQ document to answer frequently asked questions from faculty in the pilot program and determine if the right alerts and kudos were utilized. This type of faculty input can be vital to internally establish faculty/user adoption of the new tool.

- Advisor Input: Advisor input is just as important as faculty input. Advisors can tell you whether the internal processes and interventions that the institution has established for issued faculty alerts are working. Advisors can also help identify which alerts or kudos they’re seeing most frequently, allowing your institution to be proactive in offering support services to students in upcoming semesters. Advisor input also helps build user adoption of the new tool.

This same type of piloting process is recommended for the use of Cohort Success Scores, which are available without additional licenses for current Student Success/Retention users. More information on this can be found in the “Development of Success Scoring to Support Consistent Advising” section of this document.

Define Terminology & Potential Early Alerts

Research shows that “early alert systems allow educators to systematically monitor student performance and intervene when academic challenges arise” (Harris, III, et al., 2017, p. 28). There has often been debate about when early alerts should occur, but as the researchers note, they need to happen at the start of a semester and not at the halfway point of a course. As the researchers state, “The goal of an early alert system is to intervene with these support services to curb challenges students are facing while there is still time to change the trajectory of their success in a given class” (Harris, III, et al., 2017, p. 28).

Based on research and industry best practices, TargetX makes the following recommendations:

Recommended Definition of an Early Alert: An early alert should occur, during a 15-week semester, between weeks 2 and 3. The alert options for faculty to utilize should be minimal and consistent. Below are examples of potential alerts/kudos.

- Academic Performance Early Alert - This alert would be used during the early alert time frame. This alert will generate an email to the student to encourage them to seek tutoring for the course.

- Missed Work Early Alert - This alert should be used in the early alert timeframe. This alert will generate an email to students struggling in a course because of a failure to turn in assignments rather than the quality of the work.

- Non-Academic Struggles Early Alert - This alert should be utilized during the early alert timeframe when a faculty member feels there may be non-academic struggles a student is facing. This could include food or housing insecurities, daycare needs, or technology needs.

- Unsatisfactory Attendance: No Longer Attending - This alert should be utilized for a student who has missed a significant amount of their course time over the past two to three weeks (online or face-to-face) and is no longer attending the course. Students would be encouraged to withdraw from the course if they cannot make up the missed coursework. An automatic email notification will be sent to the student.

- Never Attended Class - This alert should be utilized if a student has never attended the course. This may be part of the decertification process required within the first two weeks of the semester.

- Excellent Work - This alert should be utilized for students who, within the first two to three weeks of the course, have demonstrated commitment to the course and are doing outstanding work on assignments.

In addition, Faculty should be able to raise alerts or give kudos at any point during the term, both as a feedback mechanism for students and to inform the advisors who are working with those students.
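The recommended early alert time frame (weeks 2 and 3 of a 15-week semester) can be turned into calendar dates once the term start date is known. The sketch below is illustrative only: the function name `early_alert_window` and the sample start date are hypothetical, and it assumes that week 1 begins on the semester start date and that "between weeks 2 and 3" means the whole of both weeks.

```python
from datetime import date, timedelta

def early_alert_window(semester_start):
    """Return (first_day, last_day) covering weeks 2 and 3 of the semester."""
    # Assumption: week 1 starts on semester_start and every week is 7 days.
    first_day = semester_start + timedelta(days=7)   # first day of week 2
    last_day = semester_start + timedelta(days=20)   # last day of week 3
    return first_day, last_day

start = date(2024, 8, 26)  # hypothetical term start date
opens, closes = early_alert_window(start)
print(f"Early alert window: {opens} through {closes}")
```

For the sample start date, the window runs from 2024-09-02 through 2024-09-15.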

Development of Success Scoring to Support Consistent Advising

Understanding a specific cohort of students and developing a plan to support their success can often be challenging. To help in this process, TargetX recommends identifying criteria for specific cohorts, such as adult students, first-generation students, and underserved students, using the TargetX Cohort Success Scores feature.

Cohort Success Scores will allow your institution to configure scores for any cohorts of students based on applicable risk/success factors (Success Score Rules), including the data generated by Faculty Alerts and alerts raised by advisors and other staff. Once students are scored, advisors can more easily direct their efforts toward students who need their attention.

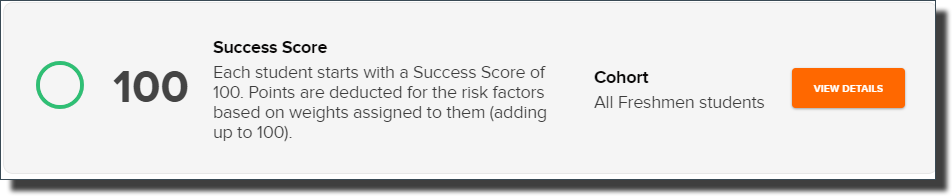

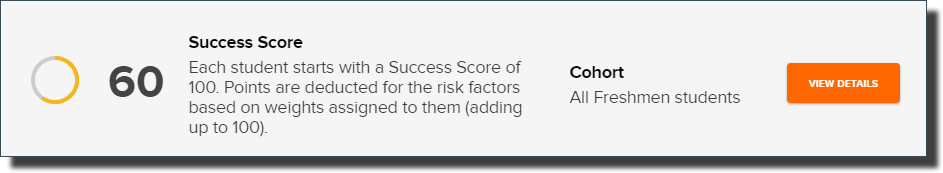

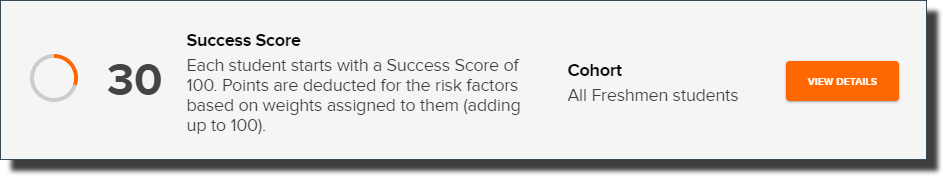

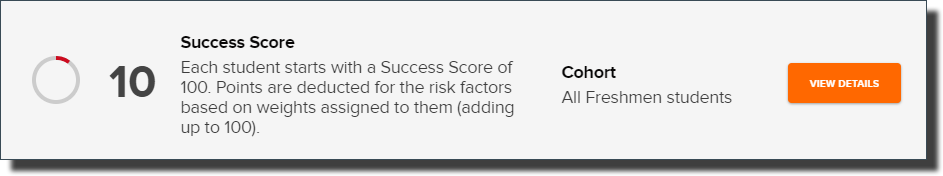

Each student starts with a Success Score of 100, and points are deducted based on Success Score Rules. The rules can be configured based on numerous categories of data:

- Academic

- Engagement

- Financial

- Demographic

- Behavioral

- Overall Number of Issued Alerts

Each category above has numerous rules that are weighed against the 100 points. For example, under Academic, if a student’s cumulative GPA is less than 2.25, they may have a deduction of 30 points. If the Engagement rule “In the previous term, the total number of Appointments attended with any advisor is less than 2” also applies, the student has an additional 25 points deducted. If the student is also a first-generation student, an additional 15 points are deducted. In this scenario, the student would have a Success Score of 30 (100 - 30 - 25 - 15).
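The arithmetic in this scenario can be sketched in a few lines of Python. This is an illustration only, not how TargetX computes scores internally; the function name `success_score` and the rule labels are hypothetical, and the point values are the ones used in the scenario above.

```python
# Illustrative only: the rule labels and function name are hypothetical;
# the point values are taken from the example scenario in the text.
STARTING_SCORE = 100

def success_score(deductions):
    """Subtract every applicable deduction from the starting score of 100."""
    # Clamping at 0 is an assumption; the text does not describe a floor.
    return max(0, STARTING_SCORE - sum(deductions.values()))

example_deductions = {
    "Cumulative GPA below 2.25": 30,
    "Fewer than 2 advisor appointments last term": 25,
    "First-generation student": 15,
}

print(success_score(example_deductions))  # 30
```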

The Success Scores are displayed on a Student’s Contact record and in the Advisor’s Student Success Center with an associated color:

- 0-25: High Risk (Red)

- 26-50: Medium Risk (Orange)

- 51-75: Low Risk (Yellow)

- 76-100: Likely to Persist (Green)
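The score ranges above amount to a simple lookup. The following sketch shows that mapping; the function name `risk_band` is hypothetical, and the snippet illustrates the published ranges rather than TargetX's own implementation.

```python
def risk_band(score):
    """Return (label, color) for a Success Score between 0 and 100."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    if score <= 25:
        return ("High Risk", "Red")
    if score <= 50:
        return ("Medium Risk", "Orange")
    if score <= 75:
        return ("Low Risk", "Yellow")
    return ("Likely to Persist", "Green")

print(risk_band(30))  # ('Medium Risk', 'Orange')
```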

Your institution will be able to create unique settings for each of the above categories, based on the specific cohort. As the examples above show, an Advisor can quickly identify a student at risk and view the details associated with the scoring to determine whether the risk is driven more by academics or by financial and overall engagement factors. Based on this determination, the Advisor can choose the appropriate actions to guide the student.

- Start with a select group of Cohorts before creating too many. Less is more.

- Students will be assigned to the first Cohort that applies to them, so make sure the rank order of the Cohorts is correct.

- Use different scoring criteria to address different student populations.

  - For freshmen, you may want to look at their high school grades, but for seniors you already have three years of their college grades and GPA.

- Enable Cohort Based Success Scores in Student Success Center (SSC).

- Sample Cohorts:

  - First Generation Freshman Students

  - STEM Majors

  - Freshman Students

  - Sophomore Students
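Because a student is assigned to the first Cohort that applies, the rank order decides the outcome whenever a student matches more than one Cohort. The sketch below illustrates that first-match behavior using the sample Cohort names above. The student fields (`class_year`, `first_generation`, `stem_major`) and the matching rules are hypothetical, and the code is not TargetX's implementation.

```python
# Cohorts listed in rank order. Names come from the sample list above;
# the matching rules and student fields are hypothetical.
COHORTS = [
    ("First Generation Freshman Students",
     lambda s: s["class_year"] == "Freshman" and s["first_generation"]),
    ("STEM Majors", lambda s: s["stem_major"]),
    ("Freshman Students", lambda s: s["class_year"] == "Freshman"),
    ("Sophomore Students", lambda s: s["class_year"] == "Sophomore"),
]

def assign_cohort(student, cohorts=COHORTS):
    """Return the name of the first cohort, in rank order, that applies."""
    for name, applies in cohorts:
        if applies(student):
            return name
    return None  # no cohort applies

student = {"class_year": "Freshman", "first_generation": True, "stem_major": False}
print(assign_cohort(student))  # First Generation Freshman Students

# If the broader cohort is ranked first, the narrower one is never reached.
misordered = [COHORTS[2], COHORTS[0]]
print(assign_cohort(student, misordered))  # Freshman Students
```

Ranking narrower Cohorts above broader ones keeps students from being absorbed by the broader Cohort.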